Let’s Talk: Do You Need PCIe 5.0?

Since last year, we’ve seen flagship motherboards equipped with PCIe 5.0 touting crazy transfer speeds, and this year PCIe 5.0 SSDs are popping up here and there as well. However, they have brought some rather unpleasant side effects: heat and cost.

If you have been paying attention to recent PCIe 5.0 product releases, you are probably familiar with this particular SSD (pictured above) with a tiny fan that produces not-so-tiny noises. Now, to be fair, not all PCIe 5.0 SSDs come with a fan like this, but those that rely on passive cooling instead need a huge heatsink sitting on top of the SSD itself, like this one from GIGABYTE.

So, marketing hype aside: if you have a PCIe 3.0 or even a SATA SSD right now, do you need to jump on the PCIe 5.0 bandwagon right away? Short answer: no. For the long answer, keep reading.

Understanding PCIe

PCIe, or Peripheral Component Interconnect Express, is the successor to the original PCI (and AGP, for graphics) standards, introduced in the early 2000s. Simply put, it is a connection standard for anything that needs a lot of bandwidth; think of it as USB on steroids. Most add-in hardware uses PCIe slots, and the standard is nearly universal: you will find it in most PCs and workstations, and even in datacenters.

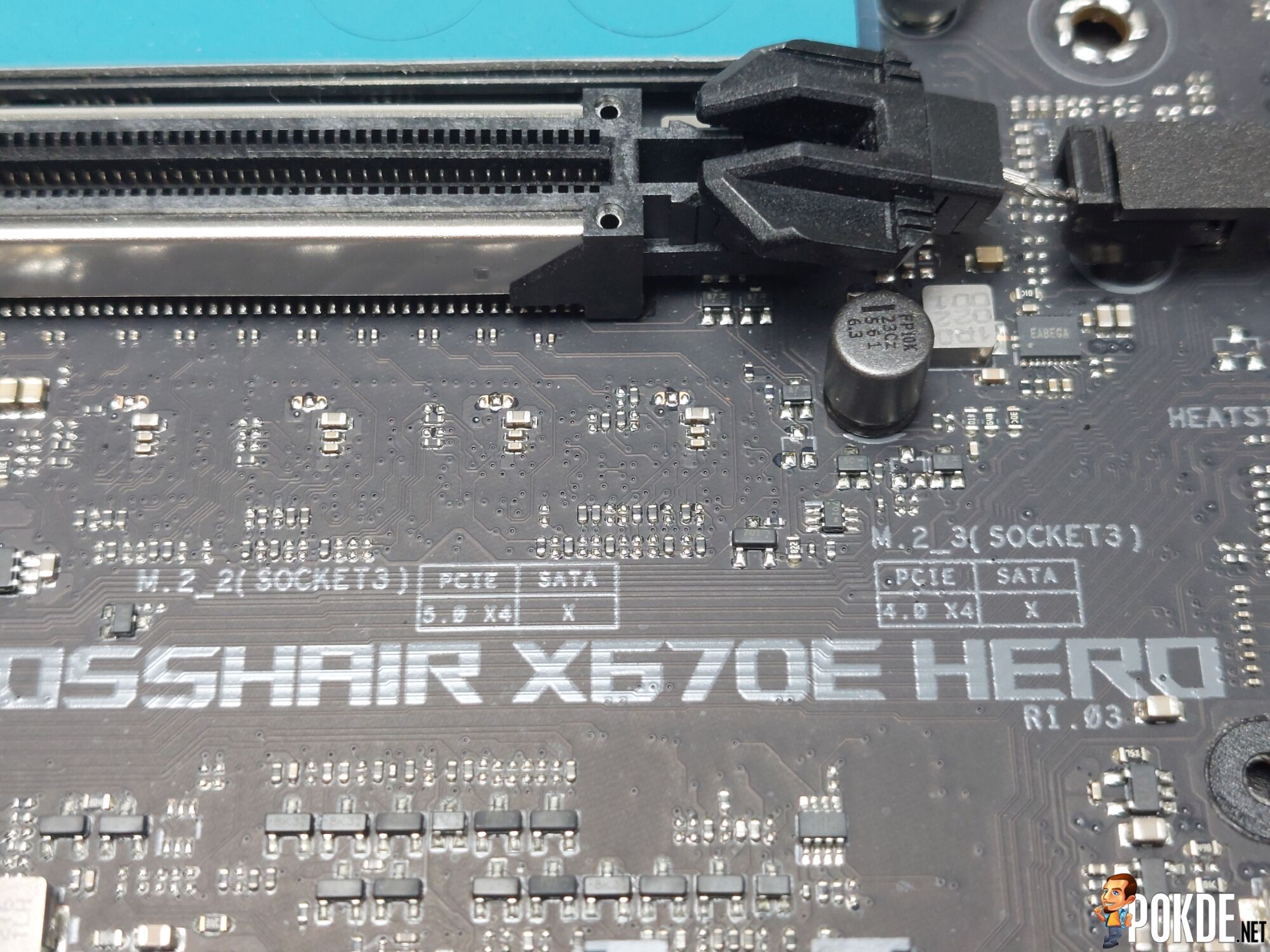

Each PCIe connection has a link width, counted in lanes: x1, x2, x4, x8, x16 and even x32 (which is extremely rare). The most common are x16 slots, usually occupied by GPUs; x4 links are used by M.2 SSDs and some add-in cards, while x1 slots typically host small cards such as USB expansion cards. Wider links also use longer slots, so x16 slots are the longest and x1 slots the shortest.

The latest revision of the standard currently on the market is PCIe 5.0, its fifth. Sixth and even seventh revisions are on the way, but those are still far off, so we won’t delve into them here. What does each revision do, you may ask? Just like other connection standards such as HDMI or DisplayPort, each revision may bring new features that change how add-in cards behave (power management, for example), but the one constant across every PCIe revision is a doubling of bandwidth.

| PCIe Standard | Bandwidth per direction (x4 link) | Bandwidth per direction (x16 link) | Transfer rate per lane |
| --- | --- | --- | --- |
| PCIe 1.0 | 1 GB/s | 4 GB/s | 2.5 GT/s |
| PCIe 2.0 | 2 GB/s | 8 GB/s | 5 GT/s |
| PCIe 3.0 | 3.94 GB/s | 15.75 GB/s | 8 GT/s |
| PCIe 4.0 | 7.88 GB/s | 31.5 GB/s | 16 GT/s |
| PCIe 5.0 | 15.75 GB/s | 63 GB/s | 32 GT/s |
| PCIe 6.0 (upcoming) | 30.25 GB/s | 121 GB/s | 64 GT/s |

Simply put, PCIe 5.0 x16 has double the bandwidth of PCIe 4.0 x16, which in turn has double the bandwidth of PCIe 3.0 x16, and so on. A PCIe 5.0 x4 link used by SSDs has the same bandwidth as the full-size PCIe 3.0 x16 slot that GPUs ran on just a few years ago!
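The doubling follows from a simple formula: per-lane transfer rate, times the share of bits left for data after line encoding, times the number of lanes, divided by 8 bits per byte. Below is a minimal Python sketch of that arithmetic. It assumes only line-encoding overhead (8b/10b for PCIe 1.0 and 2.0, 128b/130b for PCIe 3.0 through 5.0) and ignores packet and protocol overhead; PCIe 6.0 moves to a different, FLIT-based encoding and is left out. The names are illustrative, not from any library.

```python
# Share of transmitted bits that carry data under each line encoding.
ENCODING = {
    "8b/10b": 8 / 10,        # PCIe 1.0 and 2.0
    "128b/130b": 128 / 130,  # PCIe 3.0, 4.0 and 5.0
}

# (generation, transfer rate per lane in GT/s, line encoding)
GENERATIONS = [
    ("PCIe 1.0", 2.5, "8b/10b"),
    ("PCIe 2.0", 5.0, "8b/10b"),
    ("PCIe 3.0", 8.0, "128b/130b"),
    ("PCIe 4.0", 16.0, "128b/130b"),
    ("PCIe 5.0", 32.0, "128b/130b"),
]


def link_bandwidth_gbs(gt_per_s: float, encoding: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s (decimal gigabytes).

    Each transfer moves one bit per lane, so GT/s x encoding efficiency gives
    usable Gbit/s per lane; multiply by lanes and divide by 8 for GB/s.
    """
    return gt_per_s * ENCODING[encoding] * lanes / 8


if __name__ == "__main__":
    for name, rate, enc in GENERATIONS:
        x4 = link_bandwidth_gbs(rate, enc, 4)
        x16 = link_bandwidth_gbs(rate, enc, 16)
        print(f"{name}: x4 = {x4:.2f} GB/s, x16 = {x16:.2f} GB/s")
```

Running it gives about 15.75 GB/s for PCIe 5.0 x4, the same as PCIe 3.0 x16, because four lanes at 32 GT/s move as many bits as sixteen lanes at 8 GT/s.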

In The Quest For Speed

Now, all this doubling of speed may sound like dark magic, but it’s all physics. Cold, hard physics. (Bear with me, lots of science ahead.)

Data travels through conductors, in this case copper traces hidden beneath the motherboard surface. But copper is not a perfect conductor: like all materials, it has some electrical resistance, and together with other losses in the board this degrades the signal as it travels (an effect called “attenuation”). The higher the signal frequency, the worse the loss, and once the signal degrades too far, the receiving end can no longer make out what the source sent. Think of it like a bad antenna on your old analog TV: that’s what a bad, noisy signal looks like.
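Attenuation is usually measured in decibels (dB), and for a uniform trace the loss in dB grows roughly in proportion to its length. The sketch below converts a dB loss into the fraction of signal amplitude that survives. The 1 dB/cm figure is a made-up placeholder, not a measured value: real loss depends on the board material and rises with signal frequency.

```python
def amplitude_fraction(loss_db: float) -> float:
    """Fraction of the transmitted voltage amplitude left after loss_db of attenuation.

    Voltage ratios use 20 * log10, so about 6 dB of loss halves the amplitude.
    """
    return 10 ** (-loss_db / 20)


def trace_loss_db(length_cm: float, loss_db_per_cm: float) -> float:
    """Loss of a uniform trace: dB per centimeter times length."""
    return length_cm * loss_db_per_cm


if __name__ == "__main__":
    LOSS_DB_PER_CM = 1.0  # hypothetical placeholder, not a real board figure
    for cm in (5, 10, 20, 30):
        loss = trace_loss_db(cm, LOSS_DB_PER_CM)
        print(f"{cm:>2} cm: {loss:4.1f} dB loss, {amplitude_fraction(loss):.1%} of amplitude left")
```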

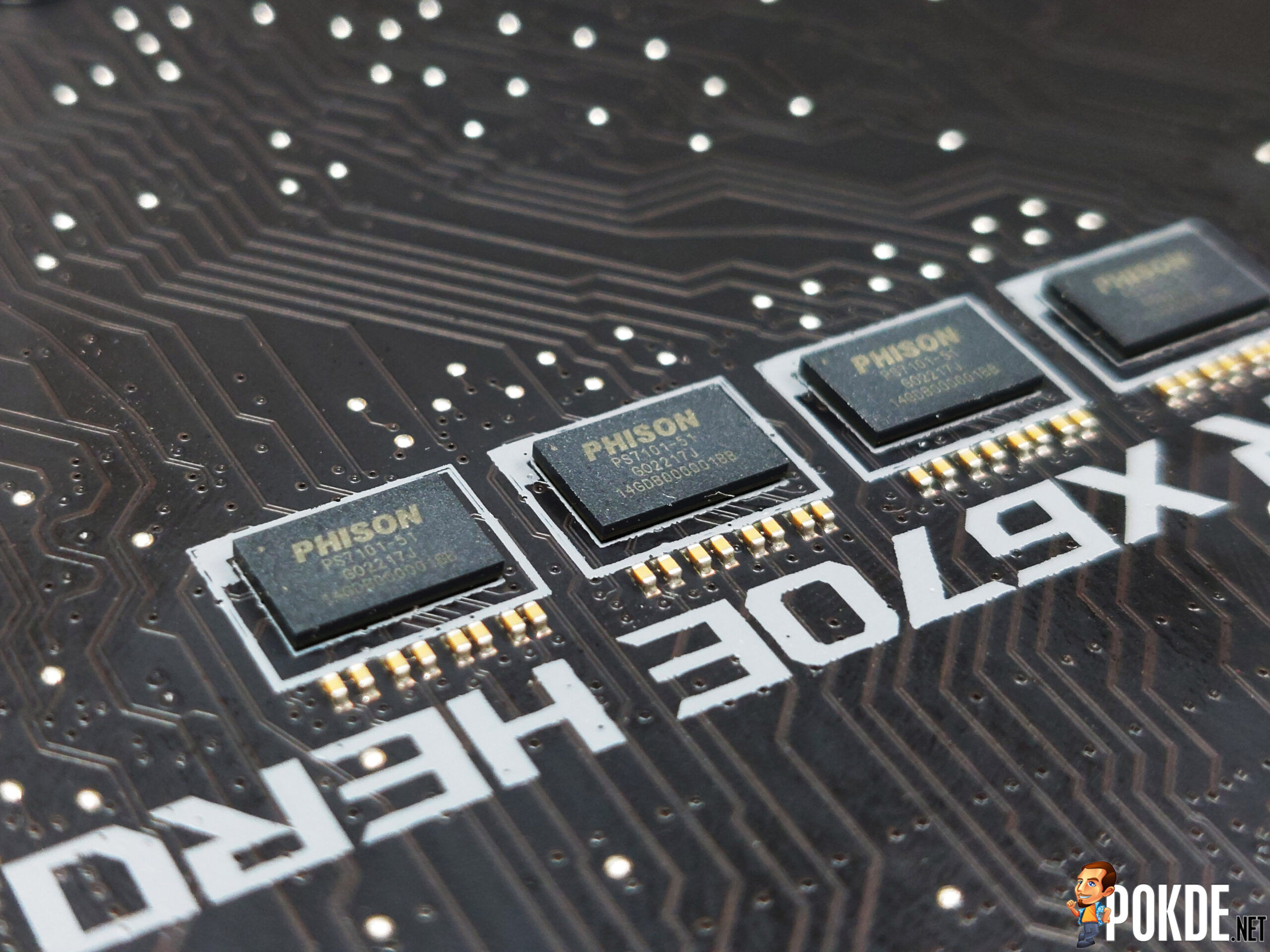

At more modest speeds over copper, like USB or Ethernet, signal degradation only becomes a concern over meters of cable rather than centimeters (Gigabit Ethernet, for example, runs over copper cables up to 100 m long). At PCIe 5.0 speeds, however, attenuation kicks in very quickly, sometimes within centimeters. To combat that, boards use chips called re-drivers or re-timers. Placed partway along the path, they restore the signal so that it is still clean enough when it reaches the receiver: a re-driver amplifies and equalizes the signal, while a re-timer fully recovers the data and sends it out again.
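One way to picture why a re-timer helps: because it recovers the data and retransmits it, each stretch of trace between chips gets its own loss budget. The sketch below counts how many re-timers a channel would need under that simplified model. Both the 36 dB budget and the 1 dB/cm trace loss are assumed round numbers for illustration, not values from the PCIe specification, and re-drivers are left out because they amplify noise along with the signal.

```python
import math

# Hypothetical per-segment loss budget; an assumed round number,
# not a value quoted from the PCIe specification.
LOSS_BUDGET_DB = 36.0


def retimers_needed(total_loss_db: float, budget_db: float = LOSS_BUDGET_DB) -> int:
    """Fewest re-timers so that no segment of the channel exceeds the loss budget.

    Models re-timers only: each one recovers and retransmits the data,
    so the segment after it starts with a fresh budget.
    """
    if total_loss_db <= budget_db:
        return 0
    segments = math.ceil(total_loss_db / budget_db)
    return segments - 1


if __name__ == "__main__":
    LOSS_DB_PER_CM = 1.0  # hypothetical trace loss
    for length_cm in (20, 40, 80):
        loss = length_cm * LOSS_DB_PER_CM
        print(f"{length_cm} cm -> {loss:.0f} dB -> {retimers_needed(loss)} re-timer(s)")
```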

On the SSD side, the chip responsible for those extreme transfer speeds is called the controller. It usually sits right behind the connector, with the DRAM and NAND chips (the chips that actually store data) further back. Think of it as a traffic cop: it directs the flow of data to the DRAM and NAND chips as needed to deliver the highest speeds. In some ways the controller works like a CPU, except that it serves a single purpose. And what happens when you put a lot of work on it? It gets hot.

In fact, PCIe 5.0 SSDs seem to have hit a wall on maximum sequential throughput, at least for now, and it isn’t the connection that’s holding them back. Here’s some napkin math: PCIe 3.0 SSDs often max out at around 3.5GB/s read/write, and PCIe 4.0 SSDs, with double the bandwidth as we discussed earlier, top out at around 7GB/s. PCIe 5.0 SSDs so far have hit around 10GB/s read/write on average (with some reaching 12GB/s), which is still some way from the roughly 14GB/s that the x4 connection can realistically deliver.
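The napkin math can be made explicit by comparing each drive’s sequential speed with the theoretical x4 bandwidth of its generation. This is a rough sketch using the speeds quoted in the paragraph above; the roughly 14 GB/s projection simply assumes PCIe 5.0 drives could eventually use the same share of their link that PCIe 3.0 drives already do.

```python
# Theoretical x4 bandwidth per direction in GB/s (128b/130b encoding).
LINK_X4_GBS = {"PCIe 3.0": 3.94, "PCIe 4.0": 7.88, "PCIe 5.0": 15.75}

# Sequential speeds quoted in the text (GB/s).
SSD_SEQ_GBS = [("PCIe 3.0", 3.5), ("PCIe 4.0", 7.0), ("PCIe 5.0", 10.0), ("PCIe 5.0", 12.0)]


def utilization(ssd_gbs: float, link_gbs: float) -> float:
    """Share of the link's theoretical bandwidth that the drive actually uses."""
    return ssd_gbs / link_gbs


if __name__ == "__main__":
    for gen, speed in SSD_SEQ_GBS:
        share = utilization(speed, LINK_X4_GBS[gen])
        print(f"{gen} SSD at {speed} GB/s: {share:.0%} of the x4 link")

    # Assumption: PCIe 5.0 drives reach the same share of the link
    # that mature PCIe 3.0 drives already do.
    mature_share = utilization(3.5, LINK_X4_GBS["PCIe 3.0"])
    print(f"Projected PCIe 5.0 ceiling: {mature_share * LINK_X4_GBS['PCIe 5.0']:.1f} GB/s")
```

PCIe 3.0 and 4.0 drives both land near 89% of their links, while today’s PCIe 5.0 drives sit at roughly 63% to 76%, which is why the limit looks like the controller rather than the interface.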

If these drives are to get faster, better and more efficient controllers are almost certainly on the books. But that means more advanced chips built on more advanced process nodes, which increases the BoM (bill of materials) cost, and most of that will be passed down to consumers. The same applies to motherboards: all the re-drivers and re-timers needed to deliver PCIe 5.0 speeds add to the cost of the whole board.

DirectStorage: A Case For PCIe 5.0?

So far, realistic use cases for PCIe 5.0 are, frankly, hard to come by. GPUs are nowhere close to saturating even a PCIe 4.0 x16 connection (even the RTX 3090 barely pushed past what PCIe 3.0 x16 could handle), which leaves SSDs as the only type of consumer PC device capable of fully using the bandwidth PCIe 5.0 provides. However, most people will tell you that sequential read/write speeds don’t matter much; it’s random read/write performance that users should pay attention to, and that, for the most part, comes nowhere near saturating PCIe bandwidth.
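Why random I/O barely touches the link comes down to simple arithmetic: bandwidth equals operations per second (IOPS) times the size of each operation. The sketch below compares two workloads; the IOPS figures are hypothetical values chosen only for illustration, not benchmark results.

```python
def throughput_mb_s(iops: float, block_size_kib: float) -> float:
    """Bandwidth a workload generates in MB/s: operations per second x bytes per operation."""
    return iops * block_size_kib * 1024 / 1_000_000


PCIE3_X4_MB_S = 3940  # theoretical PCIe 3.0 x4 bandwidth per direction, in MB/s

if __name__ == "__main__":
    # Hypothetical workloads: (IOPS, block size in KiB).
    workloads = {
        "random 4 KiB reads, low queue depth": (15_000, 4),
        "sequential 1 MiB reads": (3_000, 1024),
    }
    for name, (iops, block_kib) in workloads.items():
        mb_s = throughput_mb_s(iops, block_kib)
        print(f"{name}: {mb_s:,.0f} MB/s ({mb_s / PCIE3_X4_MB_S:.1%} of PCIe 3.0 x4)")
```

Small 4 KiB requests need an enormous number of operations per second to fill even a PCIe 3.0 link, which is why random performance is usually limited by the drive rather than the interface.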

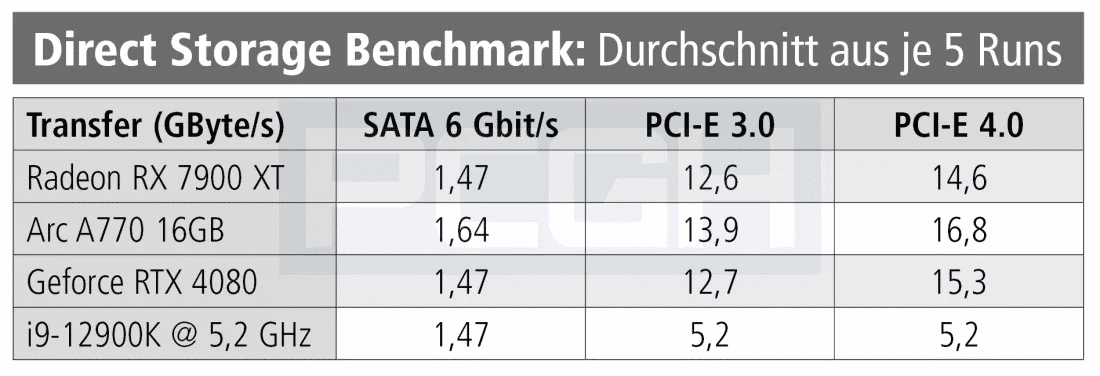

Then comes Microsoft’s DirectStorage, a new technology designed to cut game loading times down to mere single-digit seconds. It works by taking the CPU out of the bottleneck between the SSD and the GPU: storage requests are handled in efficient batches and, since DirectStorage 1.1, the GPU can decompress game assets and texture data itself, so they reach the GPU’s VRAM much faster. Here’s a chart of the maximum throughput measured in the DirectStorage benchmark test (courtesy of pcgameshardware.de):

The bottom row shows DirectStorage completely off, meaning the CPU handles the data before it reaches the GPU’s VRAM. Enabling DirectStorage more than doubles the throughput even on a PCIe 3.0 SSD, but notice that the PCIe 4.0 SSD gets only a small improvement on top of that. How would that affect game loading times? Probably very little difference between the two M.2 SSDs. Keep in mind that DirectStorage has to be actively implemented in the game itself, so older games will see no change in loading speeds.
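One way to read that result is as a pipeline bottleneck: data can only flow as fast as the slowest stage, so once the SSD outruns the other stages, a faster drive stops helping. The sketch below models just two stages, storage and decompression. All numbers are placeholders chosen to illustrate the shape of the result; they are not the pcgameshardware.de measurements, and a real loading pipeline has more stages than this.

```python
def effective_throughput(ssd_gbs: float, decompress_gbs: float) -> float:
    """A loading pipeline moves data only as fast as its slowest stage."""
    return min(ssd_gbs, decompress_gbs)


# Placeholder values for illustration only, NOT benchmark results.
SSD_GBS = {"PCIe 3.0": 3.5, "PCIe 4.0": 7.0}
CPU_DECOMPRESS_GBS = 1.5  # hypothetical: DirectStorage off, CPU does the work
GPU_DECOMPRESS_GBS = 4.0  # hypothetical: DirectStorage on, GPU decompresses

if __name__ == "__main__":
    for gen, ssd in SSD_GBS.items():
        off = effective_throughput(ssd, CPU_DECOMPRESS_GBS)
        on = effective_throughput(ssd, GPU_DECOMPRESS_GBS)
        print(f"{gen}: DirectStorage off {off} GB/s, on {on} GB/s")
```

With these made-up inputs, turning DirectStorage on more than doubles throughput for the PCIe 3.0 drive, while the twice-as-fast PCIe 4.0 drive gains only a little more, because the decompression stage now sets the limit.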

What does that mean for PCIe 5.0 SSDs, then? Even assuming a +20% improvement over PCIe 4.0 (or +50%, if you really want to be generous), that isn’t much of a gain in overall speed. Remember, loading times are already shredded down to as little as two seconds at this point, and just like with high refresh rate monitors, we’re entering the territory of diminishing returns very quickly, to the point where the difference is hardly noticeable.
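The diminishing returns are easy to put in numbers. This sketch takes the roughly two-second load time and the +20% and +50% figures from the paragraph above, and assumes (generously, for PCIe 5.0) that the entire load time scales with storage throughput.

```python
def load_time_s(baseline_s: float, speedup: float) -> float:
    """Load time if the whole load scaled with storage throughput (best case for the SSD)."""
    return baseline_s / (1 + speedup)


if __name__ == "__main__":
    BASELINE_S = 2.0  # roughly two-second load time with DirectStorage
    for speedup in (0.2, 0.5):
        new_time = load_time_s(BASELINE_S, speedup)
        print(f"+{speedup:.0%} throughput: {new_time:.2f} s (saves {BASELINE_S - new_time:.2f} s)")
```

Even in this best case, the saving is a third of a second at +20% and two thirds of a second at +50%.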

So Who Is PCIe 5.0 Really For?

Last question: if PCIe 5.0 adds so much cost and heat while giving so little in return, who is it really for? The answer: servers. Servers are usually the first to get leading-edge hardware, especially storage-related technologies, and datacenters are currently growing at an immense pace. PCIe speeds are especially important in cloud computing datacenters, where many virtual machines can hit the same system (or node) at once and quickly saturate the available performance.
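The server case is mostly about aggregation: many modest per-VM demands add up on one node. The sketch below sums a hypothetical node’s demand and compares it with a storage link’s theoretical bandwidth; the VM count and per-VM figure are made-up values for illustration.

```python
def link_load(vm_demands_gbs: list[float], link_gbs: float) -> float:
    """Combined demand from all VMs on a node, as a fraction of the link's bandwidth."""
    return sum(vm_demands_gbs) / link_gbs


if __name__ == "__main__":
    # Hypothetical node: 32 VMs, each pulling 0.4 GB/s from shared storage at peak.
    demands = [0.4] * 32
    for name, link_gbs in (("PCIe 4.0 x4", 7.88), ("PCIe 5.0 x4", 15.75)):
        print(f"{name}: {link_load(demands, link_gbs):.0%} of available bandwidth")
```

A value above 100% means the link is the bottleneck; under these assumptions the PCIe 4.0 link is oversubscribed while the PCIe 5.0 link still has headroom.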

In fact, datacenters need high-speed interconnects so badly that the industry created a new standard for them, called CXL (Compute Express Link). It runs over the same physical connection as PCIe 5.0 but adds new protocols that let CPUs and devices share memory with low latency, and some datacenters are using it to install memory expansion cards, which require extremely high access speeds (up to around 50GB/s peak for a single DDR5 module).
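The DDR5 figure comes from another transfers-times-width calculation: a DDR5 module has a 64-bit data path (split into two 32-bit subchannels, ECC bits not counted), so each transfer moves 8 bytes. The sketch below assumes DDR5-4800 and DDR5-6400 as example speed grades.

```python
def ddr_module_gbs(mega_transfers_per_s: int, bus_width_bits: int = 64) -> float:
    """Peak bandwidth of one memory module in GB/s: transfers per second x bytes per transfer."""
    return mega_transfers_per_s * (bus_width_bits / 8) / 1000


if __name__ == "__main__":
    for speed in (4800, 6400):  # example DDR5 speed grades, in MT/s
        print(f"DDR5-{speed}: {ddr_module_gbs(speed):.1f} GB/s peak")
    print("PCIe 5.0 x16: about 63 GB/s per direction")
```

At DDR5-6400 a single module peaks at about 51 GB/s, which is in the same range as a PCIe 5.0 x16 link.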

As a regular PC user, do you need a PCIe 5.0 device in the near future? In virtually all cases, no. Outside of SSDs, hardly any consumer device supports PCIe 5.0 yet; not even the RTX 4090, which runs on PCIe 4.0. Even among SSDs, PCIe 5.0 drives offer very little benefit over PCIe 4.0 or even PCIe 3.0 drives, since the generational performance leap is too small to make a noticeable real-world difference (setting aside sequential read/write speeds, which are rarely reached in practice and are an unrealistic metric on their own).

If you own a SATA SSD right now and are worried about being left behind by Microsoft DirectStorage, fret not: even a reasonably cheap PCIe 3.0 or PCIe 4.0 SSD will give you a big enough boost to capture most of the benefits PCIe 5.0 would have brought to the table, without the steep prices and huge heatsinks that come with it.

Info source: Ars Technica | @momomo_us (Twitter) | pcgameshardware.de (via TechSpot) | Tom’s Hardware | PC Gamer